How Wood Mackenzie is using a data lakehouse to inform a sustainable future

Driving clients’ sustainability ambitions with a truly integrated data solution.

6 minute read

By Yanyan Wu, Vice President of Data and Data Analytics, Chao Yang, Director of Data, Hugh Hopewell, Director of Data, Meng Zhang, Principal Data Scientist and Bernard Ajiboye, Senior Manager.

Climate change is an ongoing and constantly evolving threat, not only for the world as a whole but for individual businesses as well. To rise to the challenge, firms need to develop future-flexible solutions that improve and adapt, no matter where or how a business is reimagining its commitment to sustainability.

At Wood Mackenzie, we provide research and consultancy services to customers of every size across the natural resources industry, from oil and gas to chemicals, metals and power and renewables. As sustainability takes the spotlight in every market, we are focusing on supporting and enabling our clients to embrace the new practices and technologies that can make the most valuable impact on the future of our planet.

We provide data and data insights that help our clients understand how individual sectors are evolving and accelerating, enabling smarter decision making as they establish a footprint, expand into new markets and evolve. That means managing a large amount of data from a huge variety of sources. Our new data pipeline for US unconventional oil and gas data is a great example of how we are tackling this challenge.

The problem: mounting complexity, dwindling transparency

Our Lower 48 data is used by our clients to address critical business challenges. But with diverse sources generating huge amounts of complex data, we needed a new, integrated data solution that would meet these key requirements:

- Scalability without compromising quality

- Sufficient robustness to easily ingest and orchestrate vast quantities of information without resulting in incomplete outputs that might lead to ineffective decisions

- A transparent platform to encourage collaboration throughout the analytics lifecycle, including data ingestion and processing, real-time dashboarding, building machine learning models and model management

Our previous system gave us limited scope to enhance operational speed and scalability. This would have started to drag down our ability to provide industry-leading data and insights to our clients. Our customers rely on us to process data in a timely fashion while providing accessible, accurate and appropriate outputs. These enable better understanding of the aspects and performance of assets, in turn allowing our clients to make smarter investments. Yet the tools we were depending on were becoming slow, incomplete and unreliable.

In the past, we used a traditional SQL database. But because the data we deal with easily runs into billions of rows (structured and unstructured), that old system just was not going to scale. It was already becoming difficult to audit and was creating the following challenges:

- It was becoming harder to know how much data we had

- Clarity on how the data was linked together became ever more challenging

- Performance was very slow

- Analysts were using their own scripts, which were not transparent to anyone else

- Siloed coding and a lack of collaboration slowed down data delivery and made it impossible to scale operations effectively

- Continuous memory issues required weekly server restarts, with subsequent disruptions and inefficiencies repeatedly delaying timelines

Facing growing operational instability, we at first tried open-source Hadoop solutions. Unfortunately, they too proved difficult to scale and incredibly complex to maintain and manage, requiring developer resource that wasn’t always available. What’s more, they did nothing to solve ongoing collaboration issues, and offered no overarching machine learning capabilities. That left our data analysts to manually spin up local notebooks for data aggregation and machine learning in silos. What we needed was a new platform with transparent workflows so our team members could work efficiently together to solve our clients’ data problems.

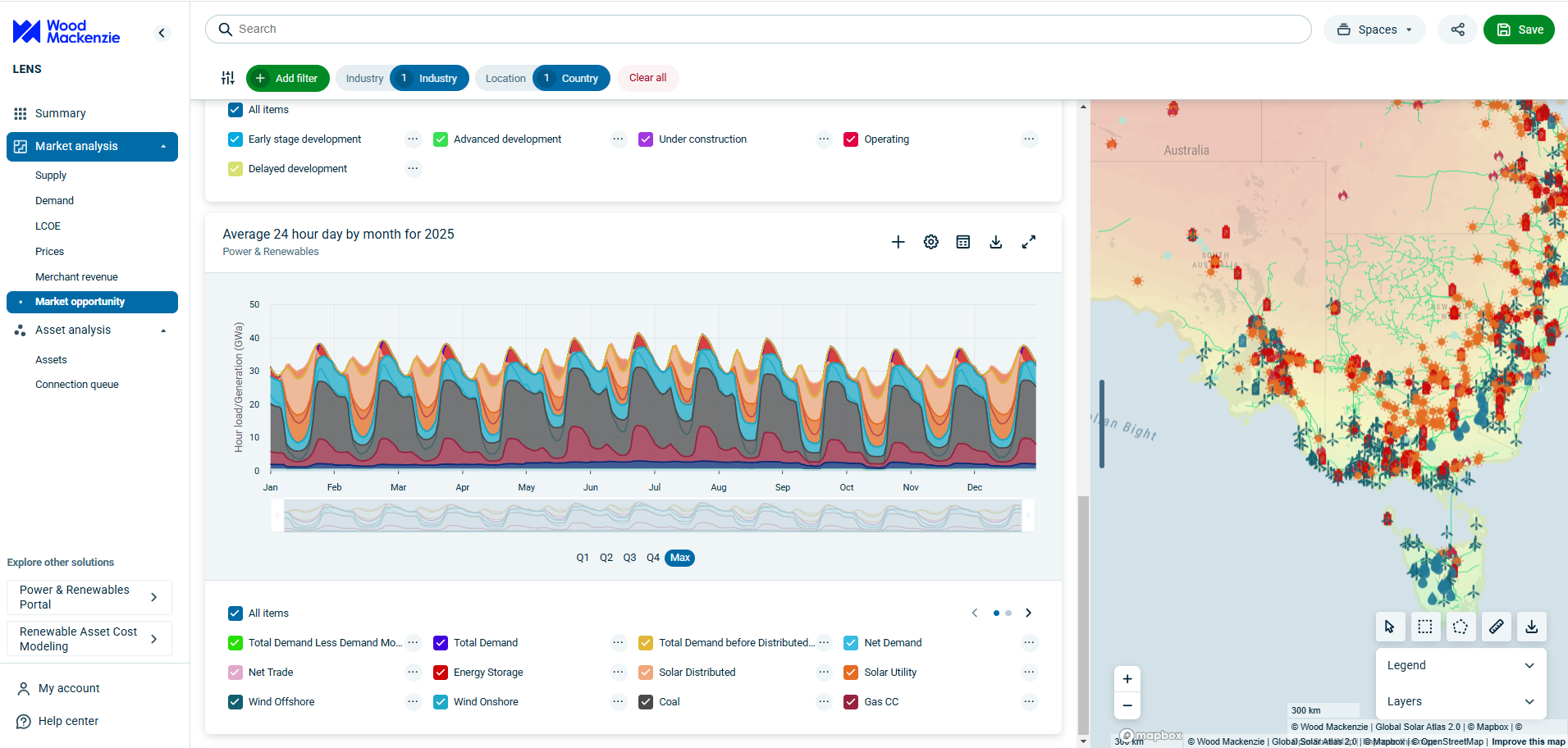

One of Wood Mackenzie’s core offerings is our proprietary enterprise data analytics platform Lens. Built to deliver insights at key decision points in end-to-end workflows, it also leverages APIs to integrate network data into our customers’ unique systems, powering both business intelligence and performance. To work effectively, Lens requires high data fidelity, seamless integration of clients’ applications and a scalable and sustainable data ETL (extract, transform and load) process.

We therefore needed to upgrade our infrastructure, not only to break down the silos across our data and team, but to ensure workflows performed effectively and efficiently.

The solution: enriching data for more accurate decision-making

Our new native architecture, which incorporates the Databricks Lakehouse Platform, provides a unified view of all our data for analytical and machine learning workloads. Through the democratisation of our data, it is designed to allow our data teams to manage data effectively and efficiently at any scale.

Our team has now been building out and using the new architecture for nearly two years, with a primary focus on continually enriching data with analytics and increasing transparency. Today, all core data tables are fully visible and trackable. We have also developed a patent-pending technology with a distributed framework to partition data by KDB tree for Neighbour Discovery, along with AI technology to improve data quality and provide data insights for the millions of oil and gas digital assets we cover. Our architecture also leverages the open-source storage framework Delta Lake and open-source machine learning platform MLflow for data and model management. This allows us to push clean, complete data to the Lens platform so that clients can query it directly.
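The general idea of partitioning spatial data with a tree before searching for neighbours can be illustrated with a minimal Python sketch. It is not the patent-pending framework described above: it uses a plain k-d style median split on in-memory 2-D points, and the function names, the `max_leaf_size` parameter and the coordinates are all hypothetical. It also ignores pairs that straddle a partition boundary, which a real distributed implementation would need to handle.

```python
from math import dist


def kd_partition(points, max_leaf_size=4, depth=0):
    """Recursively split 2-D points at the median, alternating x and y axes.

    Returns a list of partitions, each holding at most max_leaf_size points.
    """
    if max_leaf_size < 1:
        raise ValueError("max_leaf_size must be at least 1")
    if len(points) <= max_leaf_size:
        return [list(points)]
    axis = depth % 2  # 0 splits on x, 1 splits on y
    ordered = sorted(points, key=lambda p: p[axis])
    mid = len(ordered) // 2
    return (
        kd_partition(ordered[:mid], max_leaf_size, depth + 1)
        + kd_partition(ordered[mid:], max_leaf_size, depth + 1)
    )


def neighbours_within(partition, radius):
    """Return the pairs of points in one partition that lie within radius."""
    pairs = []
    for i in range(len(partition)):
        for j in range(i + 1, len(partition)):
            if dist(partition[i], partition[j]) <= radius:
                pairs.append((partition[i], partition[j]))
    return pairs
```

Because each partition is small and independent, the pairwise comparison inside `neighbours_within` could in principle be run on many partitions in parallel, which is the property that makes tree-based partitioning attractive at scale.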

We have placed a premium on workflow transparency, ensuring that whoever needs to see where data is coming from, where it’s going, and how it’s being processed can do so quickly. We use shared notebooks to drive meetings, explore data as a group, share models and insights and comment on each other's work. The increased collaboration this allows has driven a noticeable reduction in manual errors and time spent per project. Finally, we have also developed clear dashboards to monitor data quality more effectively for completeness and timeliness using Databricks SQL.
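To make the completeness and timeliness measures mentioned above concrete, here is a minimal sketch in plain Python. It is an illustration only, not the Databricks SQL dashboards themselves: the function names, the `updated_on` field and the row layout are assumptions made for the example.

```python
from datetime import date, timedelta


def completeness(rows, required_fields):
    """Share of rows in which every required field is present and not None."""
    if not rows:
        return 0.0
    complete = sum(
        all(row.get(field) is not None for field in required_fields)
        for row in rows
    )
    return complete / len(rows)


def timeliness(rows, as_of, max_age_days, date_field="updated_on"):
    """Share of rows whose date_field is no older than max_age_days at as_of."""
    if not rows:
        return 0.0
    cutoff = as_of - timedelta(days=max_age_days)
    fresh = sum(
        row.get(date_field) is not None and row[date_field] >= cutoff
        for row in rows
    )
    return fresh / len(rows)
```

Both functions return a fraction between 0 and 1, so the results could be tracked over time or compared against a threshold on a dashboard.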

The outcome: solving today’s challenges with the future in mind

Our new approach is driving real business benefits both for our clients and our team. These include:

- Increased data accuracy, timeliness and completeness, with significantly faster data delivery to customers

- Vastly improved productivity and process completion, driving a new culture of collaboration and innovation

- Value added for our customers through new insights that were previously impossible

Our new architecture, supported by workflow and big data processing tools from Databricks, has allowed us to focus more squarely on broadening our overall analytics structure. We continue to evolve our use of the platform, adding much-needed transparency and flexibility to our data operations to further drive efficiency and productivity. That has reduced the time we spend on the logistics of running routine workflows. For example, repair and rerun capabilities have cut the length of our workflow cycle by letting job runs continue after a code fix, without having to rerun the steps completed before the fix.
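The repair and rerun idea described above can be sketched in a few lines of Python. This is a toy illustration of the concept, not the Databricks feature or its API: `run_workflow` and the step names are hypothetical, and the set of completed steps is simply kept in memory.

```python
def run_workflow(steps, completed=None):
    """Run (name, function) steps in order, skipping names already completed.

    Returns (completed, failed_step); failed_step is None if every step ran.
    """
    completed = set(completed or ())
    for name, func in steps:
        if name in completed:
            continue  # finished in an earlier run, so it is not repeated
        try:
            func()
        except Exception:
            # Stop at the first failure and report which step needs a fix.
            return completed, name
        completed.add(name)
    return completed, None
```

After a failure, the caller fixes the failing step and calls `run_workflow` again with the returned `completed` set, so only the failed step and the steps after it are executed.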

Now that we have high-quality, reliable data in place, our team is also starting to leverage AI technology to gain more data insights. This will help us share relevant data and trends with our customers, connecting the right data to the right people even more quickly and easily.

We can now react more nimbly to changing business needs, market disruptions, industry fluctuations and specific customer inputs. That enables us to deploy data both internally and externally in a way that facilitates better decision making, machine learning innovations and the fine-tuning of strategic considerations for our clients as they navigate the energy transition.